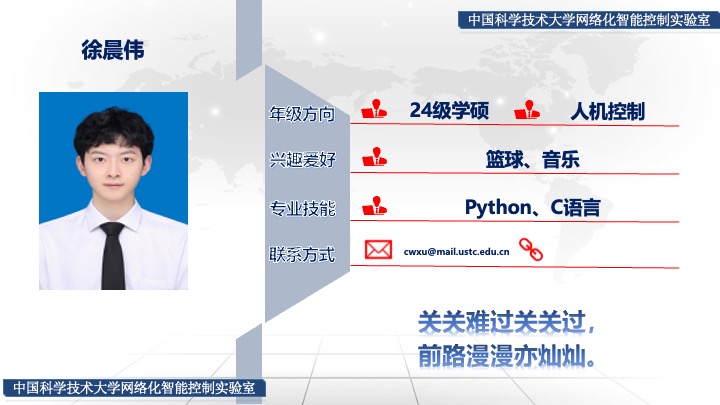

Personal Information

Lab Research Projects

Research on Intelligent and Precise Control of Ventilator Parameters Based on Reinforcement Learning

Academic Output

Authored or co-authored 0 patents; 1 paper accepted or published; 1 paper submitted and awaiting acceptance.

Journal Articles

- Human-in-the-Loop Reinforcement Learning with Risk-Aware Intervention and Imitation
  Yaqing Zhou, Yun-Bo Zhao, Chenwei Xu, Chen Ouyang, and Pengfei Li
  Expert Systems with Applications, 2026
  [doi] [pdf]